We've written a lot about how network assurance can help your teams become more proactive when troubleshooting network issues. But after 'x' number of articles on the same topic, we get that we might be retreading some very well-trodden ground already.

That's why we decided to take a different approach with this piece. We put Solutions Architect Dan Kelcher on the chaise lounge and took out our notebooks. Let's find out if, and how, IP Fabric could have turned his stories of networking despair into an easy fix.

Who: Dan Kelcher, IP Fabric Solutions Architect, 15+ years of networking experience, Cisco Champion

Where: A Fortune 1000 company with two data centers, a dozen manufacturing and distribution centers, and a strong e-commerce presence.

The situation: The company was integrating their e-commerce and CRM systems using internally developed API services hosted in Azure. Just before the project went live, the application team was working on moving from an internal staging environment to the Azure environment. There was only one issue -the application doesn't work when it's moved to Azure.

Just before the cutover, they opened a high-priority ticket and assigned it to the network team. But the team were unaware of the project, and only one previous member had actually worked with the team to build the Azure environment. Oh yeah, and there was no documentation for the environment either.

After trying to understand the issue, we ascertained that a 500 error returned whenever anyone made an API call. The app owner was certain that this was a network problem. Since the only change was moving to Azure, they believed the error came from a firewall blocking traffic from the data center to Azure. Despite the fact that there was no firewall between the data center and the Azure link. The app team manager demanded packed captures to prove it wasn't a firewall issue.

These packet captures showed traffic egressing the network towards Azure and the corresponding return traffic. Nothing was being blocked or rewritten. It took the better half of a day to finally agree it wasn't a network problem. This agreement came after the API connection was tested using cURL commands that established that the CRM app was making the API call.

The Solution: We finally found out that, in Azure, different TLS version options are accepted. It had been configured to only accept TLS 1.2, but the application used TLS 1.1. After we allowed TLS 1.1 connections in Azure, the CRM connection worked.

First off, the network team had to prove beyond reasonable doubt that the issue wasn't a network problem, but they lacked the tools and documentation to do so. This inevitably led to delays.

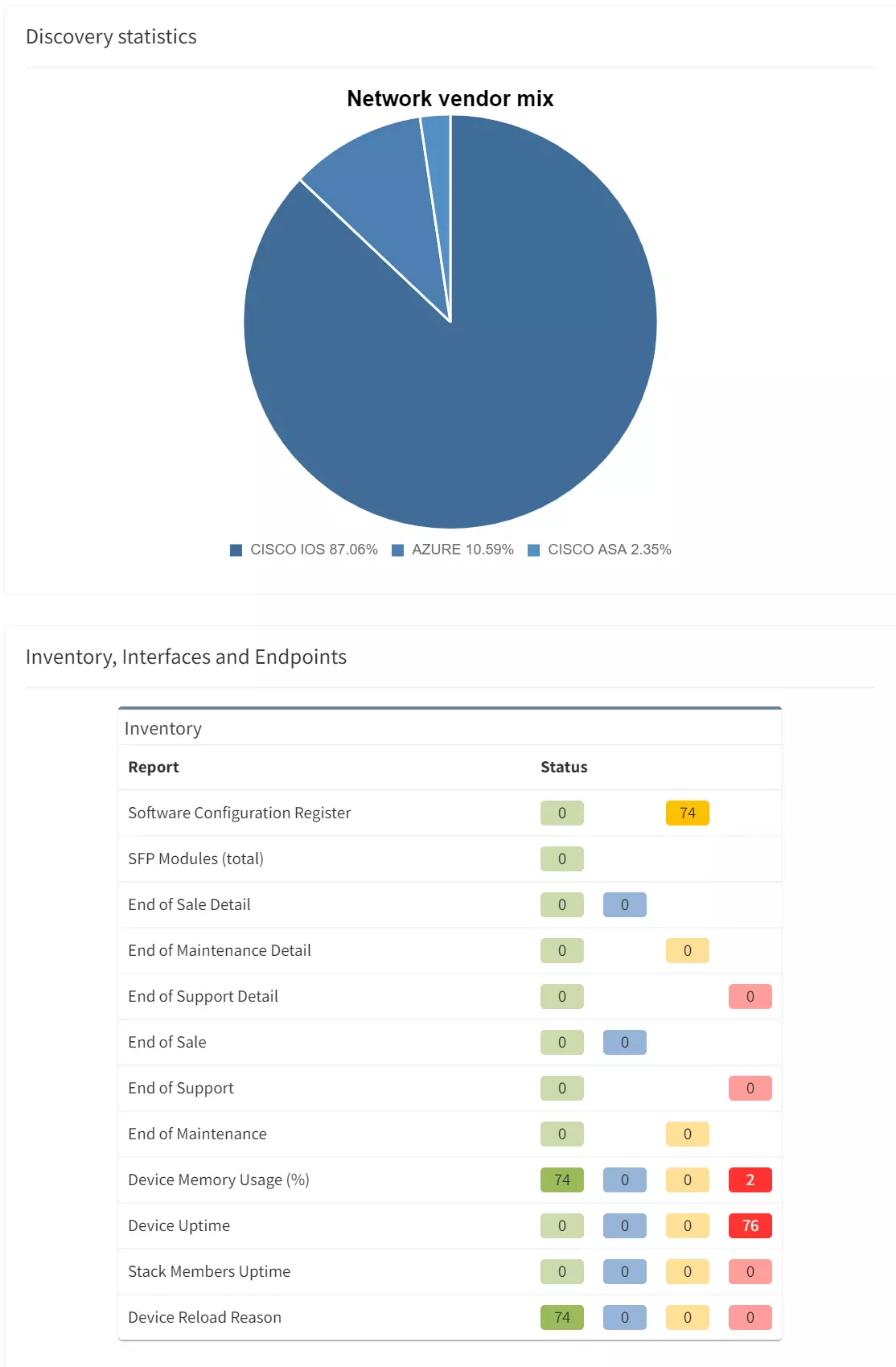

Topological diagrams would have provided insight into the Azure connection that the network team simply didn't have:

A network assurance solution like IP Fabric would have saved crucial hours and avoided jeopardizing the migration. Assurance would have enabled the relevant teams to proactively work on the issue without needing the network to prove its innocence.

Where: A North American financial institution, acquiring another company based in Europe, with a presence in Asia

The situation: The three companies in different continents maintained their own network and security standards. Security standards were implemented differently across each unit, so the networks couldn't be merged until they met combined standards. A solution was architected so that two businesses could connect networks, but this was limited to approved traffic only. Firewalls were deployed to two data centers in the UK, configured to filter traffic between the networks.

Each company maintained their own MPLS network, as well as a shared MPLS network for network management. This allowed them to implement secure segmentation without slowing down network operations.

Due to a strict change management process, minor and cosmetic changes were often ignored. This led to device configuration issues, interfaces with incorrect descriptions, misspelled policy names and more. As time went by, more and more apps were shared between the networks, which meant more rule creation to allow traffic.

One day, an architect noticed that the support team had been adding routes to the management side of the link. This meant that entire subnets were routed directly to the other side when they should have been routed to a firewall with an ACL that only permitted specific destinations, ports and protocols.

The network team started looking into these rules. They established that when rules were added that routed traffic to firewalls, traffic was still being blocked. The issue was made worse by a lack of documentation around firewall creation. The people who initially deployed them had already left the company. To get around this, we looked at mock connections in a lab environment to see if the error could be reproduced. We looked at backup configs, drew out all devices, links etc., and we found the issue immediately.

The solution: Traffic went from the North American (NA) offices to the ISP’s NA MPLS cloud, which then connected to ISP’s EU MPLS cloud, and from there to the UK datacenters. There were a pair of switches connected to the MPLS, and they had two VRFs configured, one from the US, and one from EU/APAC. Each VRF was connected to a firewall controlling access between both sides. The traffic would then egress from the EU VRF and go into the ISP’s EU MPLS.

To dig into how BGP works a little, there’s a system used to identify if routing loops exist. It looks at the AS PATH of a learned route, and if it matches the AS number that’s configured on the router, it believes there’s a loop and drops that route advertisement.

The route advertised from NA, egressing from the EU VRF contained the same AS number of the EU MPLS. Therefore, the advertisement was dropped and was never passed through to the rest of the EU/APAC network. The fix was simple. On the VRFs we configured BGP to strip out the repeated AS and replaced it with a different number, which resolved the routing issue.

The network simply wasn't functioning as intended. With IP Fabric in place, the teams could have used intent rules and path verification to identify if rules were allowing traffic to bypass the firewall.

Topology diagrams and path traces could have been used to determine the correct interfaces instead of relying on guess work. All of the information needed to identify and resolve the issue was already known within the network configuration and state information. But this wasn't immediately accessible. Using simple web interfaces, or via API, the team could have better understood their environment, and shared the data with whoever needed it, without hours of detective work.

IP Fabric could have also helped the security team out. With IP Fabric, the security team could run path traces and configure intent rules to validate whether network configurations matched what was required to maintain compliance. Being able to run daily compliance checks after change windows (or during the change window), would have given them the assurance they needed that changes weren't creating additional risk.

All in all, these two stories are perfect examples of how network assurance can give teams the data they need to proactively avoid errors, misconfigurations and misalignments from impacting network performance and service availability. In other words, network assurance could have helped these teams become more resilient, and agile in the face of these unexpected and potentially catastrophic events.

But we get it. Hindsight is 20/20.

Why not find out how IP Fabric can help you become more proactive when troubleshooting network issues? Take our free, DIY demo for a spin to see if Network Assurance is right for you here. Make sure to follow us on LinkedIn, and on our blog, where we regularly publish new content.

Will you be at Cisco Live 2023 in Las Vegas? Let us know, and book some time with a member of our amazing team!

We've written a lot about how network assurance can help your teams become more proactive when troubleshooting network issues. But after 'x' number of articles on the same topic, we get that we might be retreading some very well-trodden ground already.

That's why we decided to take a different approach with this piece. We put Solutions Architect Dan Kelcher on the chaise lounge and took out our notebooks. Let's find out if, and how, IP Fabric could have turned his stories of networking despair into an easy fix.

Who: Dan Kelcher, IP Fabric Solutions Architect, 15+ years of networking experience, Cisco Champion

Where: A Fortune 1000 company with two data centers, a dozen manufacturing and distribution centers, and a strong e-commerce presence.

The situation: The company was integrating their e-commerce and CRM systems using internally developed API services hosted in Azure. Just before the project went live, the application team was working on moving from an internal staging environment to the Azure environment. There was only one issue -the application doesn't work when it's moved to Azure.

Just before the cutover, they opened a high-priority ticket and assigned it to the network team. But the team were unaware of the project, and only one previous member had actually worked with the team to build the Azure environment. Oh yeah, and there was no documentation for the environment either.

After trying to understand the issue, we ascertained that a 500 error returned whenever anyone made an API call. The app owner was certain that this was a network problem. Since the only change was moving to Azure, they believed the error came from a firewall blocking traffic from the data center to Azure. Despite the fact that there was no firewall between the data center and the Azure link. The app team manager demanded packed captures to prove it wasn't a firewall issue.

These packet captures showed traffic egressing the network towards Azure and the corresponding return traffic. Nothing was being blocked or rewritten. It took the better half of a day to finally agree it wasn't a network problem. This agreement came after the API connection was tested using cURL commands that established that the CRM app was making the API call.

The Solution: We finally found out that, in Azure, different TLS version options are accepted. It had been configured to only accept TLS 1.2, but the application used TLS 1.1. After we allowed TLS 1.1 connections in Azure, the CRM connection worked.

First off, the network team had to prove beyond reasonable doubt that the issue wasn't a network problem, but they lacked the tools and documentation to do so. This inevitably led to delays.

Topological diagrams would have provided insight into the Azure connection that the network team simply didn't have:

A network assurance solution like IP Fabric would have saved crucial hours and avoided jeopardizing the migration. Assurance would have enabled the relevant teams to proactively work on the issue without needing the network to prove its innocence.

Where: A North American financial institution, acquiring another company based in Europe, with a presence in Asia

The situation: The three companies in different continents maintained their own network and security standards. Security standards were implemented differently across each unit, so the networks couldn't be merged until they met combined standards. A solution was architected so that two businesses could connect networks, but this was limited to approved traffic only. Firewalls were deployed to two data centers in the UK, configured to filter traffic between the networks.

Each company maintained their own MPLS network, as well as a shared MPLS network for network management. This allowed them to implement secure segmentation without slowing down network operations.

Due to a strict change management process, minor and cosmetic changes were often ignored. This led to device configuration issues, interfaces with incorrect descriptions, misspelled policy names and more. As time went by, more and more apps were shared between the networks, which meant more rule creation to allow traffic.

One day, an architect noticed that the support team had been adding routes to the management side of the link. This meant that entire subnets were routed directly to the other side when they should have been routed to a firewall with an ACL that only permitted specific destinations, ports and protocols.

The network team started looking into these rules. They established that when rules were added that routed traffic to firewalls, traffic was still being blocked. The issue was made worse by a lack of documentation around firewall creation. The people who initially deployed them had already left the company. To get around this, we looked at mock connections in a lab environment to see if the error could be reproduced. We looked at backup configs, drew out all devices, links etc., and we found the issue immediately.

The solution: Traffic went from the North American (NA) offices to the ISP’s NA MPLS cloud, which then connected to ISP’s EU MPLS cloud, and from there to the UK datacenters. There were a pair of switches connected to the MPLS, and they had two VRFs configured, one from the US, and one from EU/APAC. Each VRF was connected to a firewall controlling access between both sides. The traffic would then egress from the EU VRF and go into the ISP’s EU MPLS.

To dig into how BGP works a little, there’s a system used to identify if routing loops exist. It looks at the AS PATH of a learned route, and if it matches the AS number that’s configured on the router, it believes there’s a loop and drops that route advertisement.

The route advertised from NA, egressing from the EU VRF contained the same AS number of the EU MPLS. Therefore, the advertisement was dropped and was never passed through to the rest of the EU/APAC network. The fix was simple. On the VRFs we configured BGP to strip out the repeated AS and replaced it with a different number, which resolved the routing issue.

The network simply wasn't functioning as intended. With IP Fabric in place, the teams could have used intent rules and path verification to identify if rules were allowing traffic to bypass the firewall.

Topology diagrams and path traces could have been used to determine the correct interfaces instead of relying on guess work. All of the information needed to identify and resolve the issue was already known within the network configuration and state information. But this wasn't immediately accessible. Using simple web interfaces, or via API, the team could have better understood their environment, and shared the data with whoever needed it, without hours of detective work.

IP Fabric could have also helped the security team out. With IP Fabric, the security team could run path traces and configure intent rules to validate whether network configurations matched what was required to maintain compliance. Being able to run daily compliance checks after change windows (or during the change window), would have given them the assurance they needed that changes weren't creating additional risk.

All in all, these two stories are perfect examples of how network assurance can give teams the data they need to proactively avoid errors, misconfigurations and misalignments from impacting network performance and service availability. In other words, network assurance could have helped these teams become more resilient, and agile in the face of these unexpected and potentially catastrophic events.

But we get it. Hindsight is 20/20.

Why not find out how IP Fabric can help you become more proactive when troubleshooting network issues? Take our free, DIY demo for a spin to see if Network Assurance is right for you here. Make sure to follow us on LinkedIn, and on our blog, where we regularly publish new content.

Will you be at Cisco Live 2023 in Las Vegas? Let us know, and book some time with a member of our amazing team!